The Ethics of AI in Writing

When it comes to artificial intelligence, I’m a luddite. I’m analog over digital. Forget Pandora® and Spotify® or even CDs. Vinyl LPs rule them all. I grew up playing outside, climbing trees, chasing things, reveling in sticks – not joysticks, just sticks. If they looked like a sword or a gun, even better. I’m a Labrador retriever, but literate. I have the tech savvy of your average canine too. That’s because I’m Gen X. I was raised before the interweb, before social media and Netflix. I remember Atari, Nintendo, Sega, and Alladin’s Palace. I slogged through the dial-up era. I even met my wife on Myspace. Rock on! When Sunday comes, I actually leave my house to go to church! I turn my phone off to listen to the sermon. And the sermon isn’t at 1.5x speed either. It’s at regular speed, and it takes forever. But that’s how I roll.

There are some disadvantages to being an old-school luddite like me. But there’s one big advantage. We first learned about AI from The Terminator. We see artificial intelligence through the lens of Skynet killbots. We learned to fear it before we were ever tempted to love it.

We’re not surprised to find that ChatGPT, for example, poses some major threats to modern writing. It’s not all bad, of course. AI image-builders are great at stirring your creative juices. Writing engines can be a great research tool for summarizing big data into small bites. Long before ChatGPT hit the market, spell-checkers and grammar assistants were helping to spot-clean our writing on the fly. And I’m sure AI tech is out there tracking down terrorists, blocking telemarketers, rejecting spam, and exterminating viruses. AI can be wonderful. But technology can be used for good or evil, depending on how people wield it. So, when it comes to publishing, we should be aware of some of the ethical problems AI poses.

First, if you didn’t write it, you’re not the author.

The most glaring problem with AI writing is plagiarism. If you are writing a paper and use AI to generate a sentence, a paragraph, or more, then that’s content you didn’t write. If you present that writing as your own, you are lying. That’s plagiarism. Ethically, you would need to credit that AI program as a co-author. If you’re using AI to write your blog or online article, you should at least say: “Written with the assistance of AI/ChatGPT/etc.” And while that’s better than nothing, if that’s all you say about AI, it’s still misleading, since you didn’t use AI merely to fact-check or assist with research. The writing itself was produced by a writing engine. If AI wrote a significant portion of the article, blog, or book, then you aren’t the sole author, and AI didn’t just “assist” you. You two are co-authors. It’s misleading at best, and dishonest at worst, to claim authorship for written material that you didn’t author. So don’t be surprised if publishers or professors reject your papers and accuse you of plagiarism when you claim AI writing as your own.

Second, if you didn’t learn it, you don’t know it.

AI is a Godsend when it comes to research. With AI you can get quick summaries, condense tons of information, and hunt down obscure quotes, authors, and books. I’m a big fan of AI as a research tool. But there’s a looming delusion with AI-infused research. People can radically overestimate their expertise to whatever extent they rely on AI to do the “thinking” for them.

Consider it this way. If you had a forklift and used it to lift thousand-pound loads, would that mean you’re strong? Of course not. A forklift is a tool for heavy lifting, and that’s fine. That’s what tools are for: to make work easier. But the machine did the hard work, not you. So you aren’t strong. The machine is. Now imagine you have a forklift, and not only do you use it to lift thousand-pound loads on the job site, but you also use it at your home gym for your weightlifting. Your whole strength-training routine features you sitting in the driver’s seat, steering this forklift to move weights, pull loads, flip tires, push sleds, and carry you through miles of jogging trail. You’re using the forklift for exercise, so does that forklift now make you strong? Still no. You’re no stronger, and likely weaker, because that machine is taking over the hands-on work you should have been doing to grow fit and strong.

That’s how we often treat AI. Instead of wielding it as a tool in the hands of a skilled craftsman, we lean on it like an artificial limb that leaves us handicapped and codependent. AI, therefore, must be subordinated to the task of learning. It should function in service of our learning. As writers, publishers, and content creators, we should be learning about the subjects we’re writing about; we should be gaining experience and expertise. We do well, then, to take full responsibility for the learning task before us, so we’re not using AI to substitute the appearance of learning for actual learning and knowledge. Rather, we should be using AI to help us learn and gain knowledge. At the end of the day, if you’re reposting AI content that you didn’t learn for yourself, then you don’t know whether that content is correct, fair, or reasonable. If you didn’t learn it, you don’t know it.

Third, if you don’t lead it, you’re led by it.

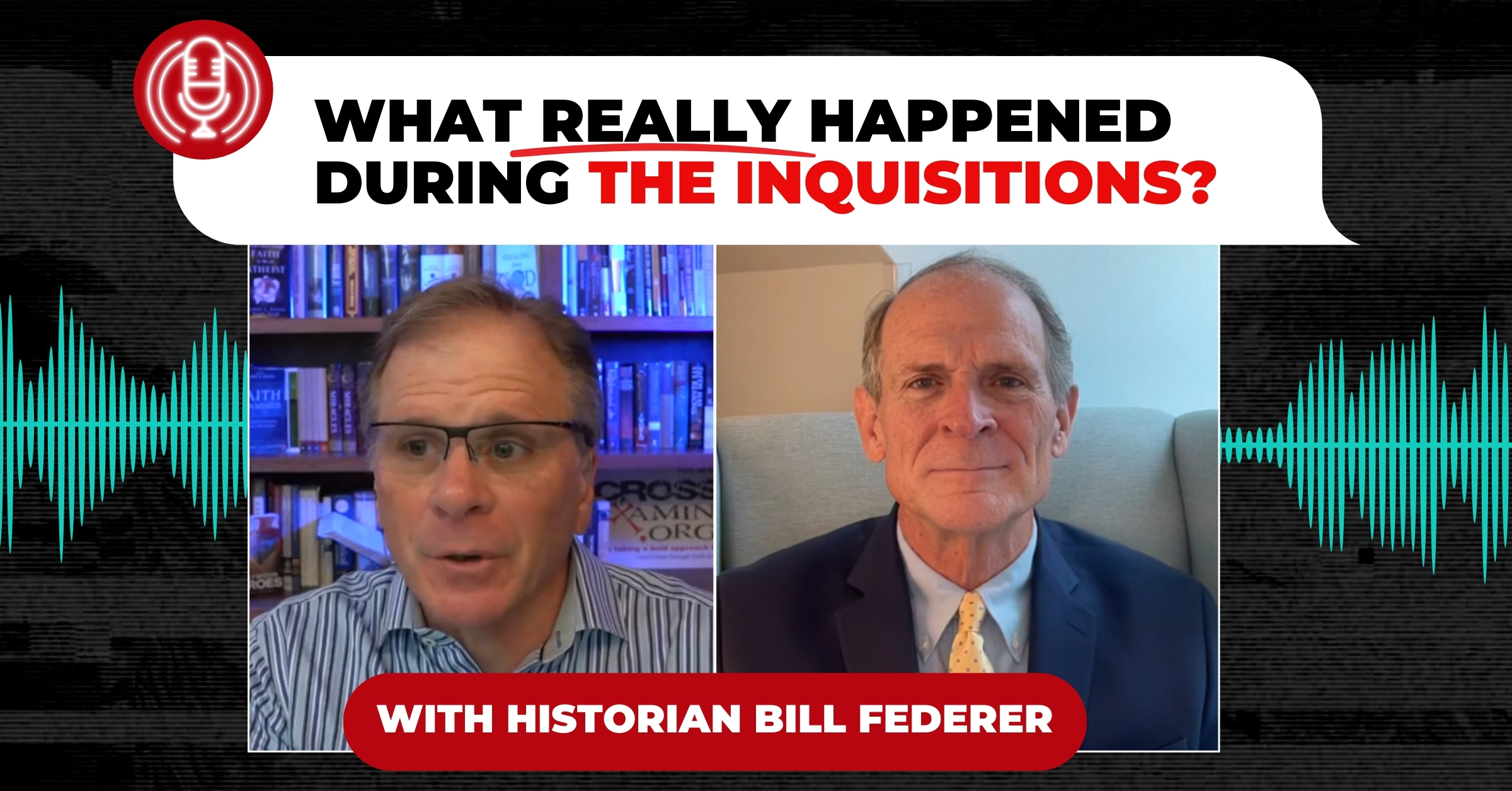

A third problem facing AI-usage is that it “has a mind of it’s own.” I’m not talking about actual autonomous life. We’re probably not at the point of iRobot or even Skynet. I’m talking about how AI isn’t neutral or objective, and it’s often laughably mistaken. If you followed Google’s “Gemini” launch fiasco then you know what I’m talking about. In February 2024, Google launched an AI-engine called “Gemini.” It could generate images, but never of white people. Apparently, it had been programmed to avoid portraying white people and, instead, to favor images of black people and other minorities. Allegedly, this is from a DEI initiative written into its code. So, if you asked for images of the Pope you might get one of these instead:

Now I’m not too worried about Gemini 1.0. I’m more concerned about the AI engines that are so subtle that you’ll never realize when they skew information in favor of a political narrative. As writers, editors, authors, and content creators, we need to do more than take credit for our content. We need to take responsibility for it too. That means we take leadership over the tools used in research, fact-finding, and learning. Instead of letting those tools lead us whichever direction they’re programmed to go, we decide for ourselves whether those directions are worth going, change course as needed, and refuse to let a Google algorithm determine what we are going to think or believe. Put another way, we should expect AI to introduce some degree of slant and bias into the equation. So instead of trusting AI to tell the truth and report events accurately, we need to keep a healthy dose of skepticism on hand and be ready to correct for our own biases and the bias we find in AI programming.

At an innocent level, an AI writing program might be biased in favor of formal writing – replacing all contractions like “aren’t,” “we’re,” and “y’all” with “are not,” “we are,” and “youz guys.” At a more insidious level, AI can insert a decidedly partisan slant – especially when it comes to progressive political agenda items. It would be naïve to think that Google, Microsoft (Bing), and the like aren’t willing and able to let political and religious bias slip into their programming.

There’s No Going Back to the Stone Age

Now I may be a luddite, but I’m no fool. I understand that unless there’s nuclear fallout, or something comparable, there’s no way we’re going back to the days of dot matrix printers and analog typewriters. We aren’t going back to the Stone Age as long as these time-saving tools are still functional. I write these warnings to you not as a prophet but as a minister. I don’t foresee technological disasters crashing down on us. Rather, I’m a hopeful Christian encouraging all you aspiring writers out there to model academic integrity, write well, own your material, and grow through the writing process.

Oh, and Analog > Digital. Long live Vinyl!

Dr. John D. Ferrer is a speaker and content creator with Crossexamined. He’s also a graduate of the very first class of the Crossexamined Instructors Academy. Having earned degrees from Southern Evangelical Seminary (MDiv) and Southwestern Baptist Theological Seminary (ThM, PhD), he’s now active in the pro-life community and in his home church in Pella, Iowa. When he’s not helping his wife Hillary Ferrer with her ministry Mama Bear Apologetics, you can usually find John writing, researching, and teaching cultural apologetics.